TECH TALK

24 Mar 2025

PIXOTOPE

ALTER YOUR REALITY THROUGH THE LENS

The team at leading visual distributor ULA Group are not ones to rest on their laurels. In addition to distributing major entertainment and architectural lighting brands, they’re also big in LED display and processing. Now they’ve got involved with Norway-based company Pixotope, known for cutting-edge extended and augmented reality solutions for live broadcast.

Unlike most announcements that such-and-such distributor is now handling such-and-such a brand, this one deserves some explanation. That’s because Pixotope’s products are not only novel in what is still an emerging field; in some instances, they’re revolutionary.

With the exception of a couple of small sensors and accessories, all Pixotope products are software. Each is dedicated to performing some kind of virtual magic with video in real-time. You may have noticed some augmented reality in the recent Super Bowl LIX broadcast – that was their software. Gigs don’t get much bigger than that.

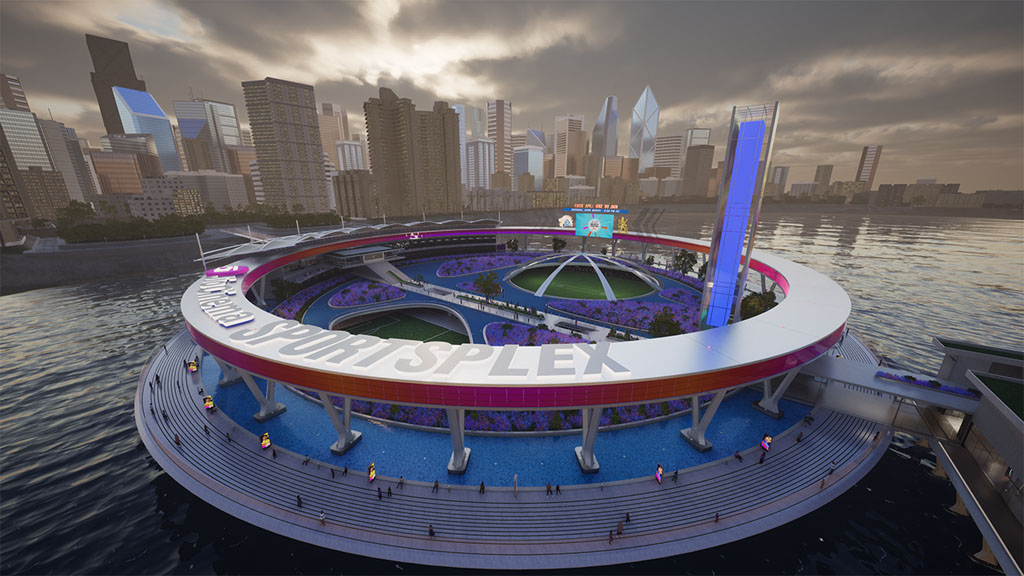

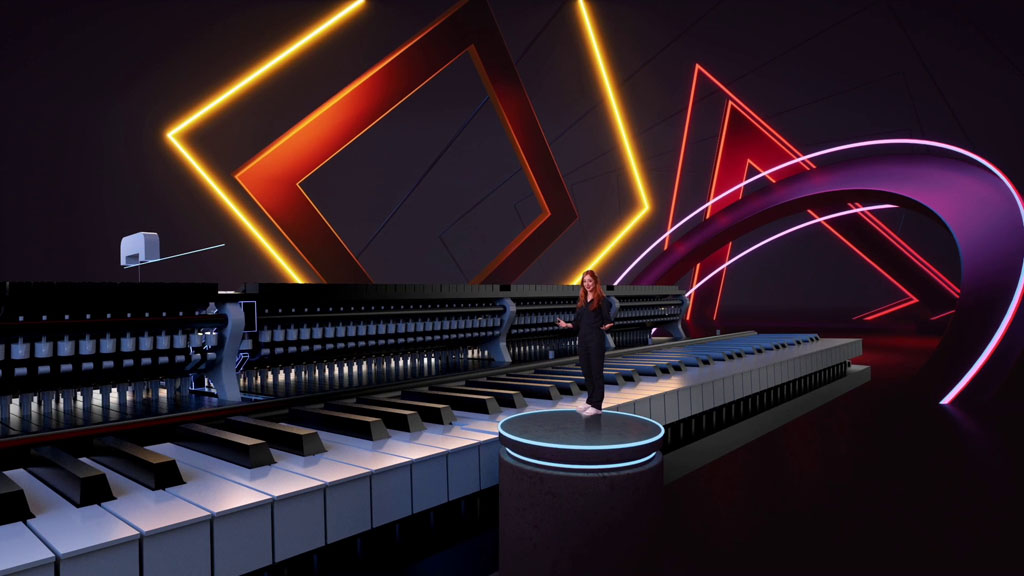

Pixotope’s range of software includes tools for VS/AR graphics in studio and live, plus talent tracking that inserts real people into virtual sets and makes them interact perfectly with digital elements. Their Living Photo software turns 2D video of people into 3D interactive assets that you can incorporate into live broadcasts. They offer both indoor and outdoor camera tracking, even through a phone app. Most jaw-dropping is their camera tracking product Pixotope Fly, which uses their amazing ‘Through-the-Lens’ video tracking to place moving, interactive digital elements into live video from drones, fly cams, or Steadicams.

I talked with Gideon Ferber, Pixotope’s Senior Vice President, Product, from their office in London, where they’ve been making huge inroads into Europe’s broadcast and production industries.

“We started as a production company in 2013,” begins Gideon. “We were called The Future Group and we were working on a Norwegian TV game show called ‘Lost in Time’. It was technically groundbreaking. The whole show was filmed in a green screen studio with tracked props in a virtual environment. We were doing everything inside of Epic Games’ Unreal Engine. The contestants competed with physical props. If they needed to pull a lever, there was an actual lever that was encoded, and every action they took in real life translated to an action in the virtual world.”

This was in 2017, two years before Disney’s The Mandalorian would make Unreal Engine synonymous with virtual production.

“We used Unreal Engine because visually, there was no comparison to any other product,” elaborates Gideon. “But back then, Unreal Engine didn’t have features for broadcast. We were the first to introduce SDI in and out, lens calibration, genlock, and time code. In 2019, out of all of that innovation came Pixotope, taking all those components and building a product. We realised we had a really good ecosystem that can be used for broadcast that is super stable, powerful visually, and with fidelity like no other.”

Today, this means Pixotope are running a customised version of Unreal Engine with their own modules built around it. “Other than the design and rendering tools, we are not using any of Epic Games’ components,” confirms Gideon. “We are in full control of our own video pipeline from the first frame hitting the engine until it leaves; it’s all our own code.

“The same goes for tracking, lens calibration, and every aspect of the engine. It just gives us more control, and we can guarantee our support level to our customers. As you mentioned, we did the Super Bowl for the sixth time in a row, this year with Fox. If something was not right and they had encountered a bug, we know each line of code. If anything is broken, we can fix it, and this way, we can guarantee that a mission-critical on-air show is always running.”

Pixotope made a decision early on that they would not be building their own black boxes to run their products; all the major products are licensed software. “We want to deal with hardware as little as we possibly can,” Gideon says. “It gives us flexibility. It means we can upgrade faster; we can release fixes faster.

“We can come up with crazy ideas and just give them a try. For example, we recently launched Reveal, our AI-powered background segmentation tool. Quite a few people have tried to build something like it, but no one has succeeded so far. Because we don’t have any proprietary hardware, we were free to just try. Being software-only also gives us the ability to be more flexible with our licenses; all the licenses are cloud-based, and customers can reassign them between different users. They’re not tied to a machine, or a dongle. It just makes life a lot easier.”

Pixotope’s talent tracking all happens using nothing but the video coming from cameras – there are no physical reference points, dots, markers, or sensors. I asked Gideon how on earth that is possible. “We do it two ways,” he divulges. “One is with Body Pose Estimation (BPE), which is a feature from Nvidia that runs in their graphics cards. Purely from the video, it analyses the silhouette and generates a wire-frame skeleton. The second option is more robust and offers greater flexibility, which is why we refer to it as TalenTrack. TalenTrack works on multiple cameras, with small sensor cameras that you can mount anywhere, and they create a tracked volume. Within that volume, characters will be tracked. You can expand it to as many cameras as you want. If I wanted to track a corridor, then I could put 20 cameras in a row; there is no real limitation.

“It generates a wire-frame skeleton from the image, does the triangulation between the cameras, and builds the 3D skeleton for up to five characters at once. It doesn’t care where the main camera is, because it’s a standalone system.”
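For readers curious about what “triangulation between the cameras” can look like in practice, here is a minimal Python sketch. It is not Pixotope’s code, and TalenTrack’s actual method is not described in this interview. It assumes each camera has already turned a detected skeleton joint into a viewing ray (a camera position plus a direction), and it uses the textbook midpoint method: find the closest points on two rays and take the point halfway between them. The function name `triangulate_midpoint` and the example camera positions are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Vec3, direction: Vec3, distance: float) -> Vec3:
    """Point reached by travelling `distance` along `direction` from `origin`."""
    return (
        origin[0] + distance * direction[0],
        origin[1] + distance * direction[1],
        origin[2] + distance * direction[2],
    )


def triangulate_midpoint(
    origin_a: Vec3, dir_a: Vec3, origin_b: Vec3, dir_b: Vec3
) -> Vec3:
    """Estimate a 3D joint position from two camera viewing rays.

    Hypothetical helper: each ray starts at a camera position and points
    towards the joint as seen by that camera. The estimate is the midpoint
    of the shortest segment connecting the two rays.
    """
    w = (
        origin_a[0] - origin_b[0],
        origin_a[1] - origin_b[1],
        origin_a[2] - origin_b[2],
    )
    a = _dot(dir_a, dir_a)
    b = _dot(dir_a, dir_b)
    c = _dot(dir_b, dir_b)
    d = _dot(dir_a, w)
    e = _dot(dir_b, w)

    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays never converge, so there is no unique estimate.
        raise ValueError("rays are parallel; cannot triangulate")

    s = (b * e - c * d) / denom  # distance parameter along ray A
    t = (a * e - b * d) / denom  # distance parameter along ray B

    closest_on_a = _along(origin_a, dir_a, s)
    closest_on_b = _along(origin_b, dir_b, t)
    return (
        (closest_on_a[0] + closest_on_b[0]) / 2,
        (closest_on_a[1] + closest_on_b[1]) / 2,
        (closest_on_a[2] + closest_on_b[2]) / 2,
    )


# Two made-up cameras, four metres apart, both looking at the same joint.
joint = triangulate_midpoint(
    (0.0, 0.0, 0.0), (1.0, 2.0, 3.0),
    (4.0, 0.0, 0.0), (-3.0, 2.0, 3.0),
)
```

With more than two cameras, one common approach is to repeat this for each pair of cameras and average the results, which fits the idea that adding cameras simply extends the tracked volume.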

One of the most impressive bits of tech in their arsenal is Fly, enabling epic XR and AR effects with video that is literally flying. “The ‘Through-the-Lens’ technology that runs Fly is from a German company called TrackMen that we acquired in 2022,” Gideon outlines.

“It’s absolutely mind-blowing! It works by taking the frame and analysing it for contrast points. Although it was initially designed for drone cameras, we have customers using it exclusively in studios. It doesn’t require encoders, markers, or any additional equipment on the camera, meaning there’s no added weight for the camera operator. It’s purely analysing the video, and if the studio has enough clear contrast points that you can track, it works like magic.”
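To give a rough feel for what “analysing the frame for contrast points” might mean, here is a toy Python sketch. It is an illustration only and makes no claim about how Through-the-Lens tracking actually works. It assumes a greyscale frame stored as a rectangular list of rows of brightness values, and it flags pixels where brightness changes sharply both horizontally and vertically, since corner-like spots are easier to follow from frame to frame than flat areas or straight edges. The function name `find_contrast_points` and the threshold value are hypothetical.

```python
from typing import List, Tuple


def find_contrast_points(
    frame: List[List[int]], threshold: int
) -> List[Tuple[int, int]]:
    """Return (row, col) positions of corner-like, high-contrast pixels.

    Hypothetical helper: a pixel qualifies when the brightness difference
    between its left/right neighbours AND between its upper/lower
    neighbours both reach `threshold`.
    """
    points = []
    for r in range(1, len(frame) - 1):
        for c in range(1, len(frame[r]) - 1):
            horizontal = abs(frame[r][c + 1] - frame[r][c - 1])
            vertical = abs(frame[r + 1][c] - frame[r - 1][c])
            if horizontal >= threshold and vertical >= threshold:
                points.append((r, c))
    return points


# A 5x5 frame with a bright square in the lower-right corner.
square_frame = [
    [255 if r >= 2 and c >= 2 else 0 for c in range(5)] for r in range(5)
]
square_points = find_contrast_points(square_frame, threshold=100)

# A uniformly white frame, like a featureless backdrop.
flat_frame = [[255] * 5 for _ in range(5)]
flat_points = find_contrast_points(flat_frame, threshold=100)
```

The uniform frame yields no points at all, which is the problem a tracker faces when a scene offers too little contrast to lock onto.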

There are still some technical hurdles to overcome, and Pixotope is making steady progress on them.

“One of the latest features we added to Fly was support for zoom,” Gideon continues. “Doing it purely on video is difficult, because the minute you start zooming in, you’re losing your points of contrast. You need to know the environment really well to be able to do any level of zoom.

“The way our solution works is that you build your cloud of contrast points ahead of time. During production, you can then zoom inside that area. We did some shooting in Oslo a couple of weeks ago at a ski jump, testing our own boundaries, and to see if it works with snow. How do you track white against white? Do you have enough information? The Winter Olympics are coming next year, and we want to make sure we have something cool for them. We did exactly that, and it worked really well! As long as you have some elements in the scene that are constant, you’re golden!”

Main Photo: Virtual studio for Viacom18. Credit to Sequin AR and Viacom18
