27 Aug 2019

Video File Formats, Containers and Codecs

Yes… it’s complicated

by Simon Byrne.

Video is a huge part of events, so reliable playback of video files is essential. Enter Formats, Containers and Codecs.

High definition, high quality uncompressed video file sizes are massive, about 650 gigabytes per hour. That is more than 10 gigabytes a minute! This is unmanageable for most applications because it is costly and impractical to store that amount of data. Also, the bandwidth that is required to transport and stream those file sizes is insane.
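As a rough check on those figures, the raw data rate can be worked out from the frame size and frame rate. The sketch below assumes 1920 by 1080 pixels, 30 frames per second, 8 bits per channel and three full colour channels; the article does not state which settings its figure is based on, so treat the parameters as illustrative.

```python
def uncompressed_bytes_per_second(width, height, fps, bits_per_channel=8, channels=3):
    """Raw video data rate with no compression and no chroma subsampling."""
    bits_per_frame = width * height * channels * bits_per_channel
    return bits_per_frame * fps / 8  # 8 bits per byte


# Assumed settings: 1080p, 30 fps, 8-bit, three full colour channels.
rate = uncompressed_bytes_per_second(1920, 1080, fps=30)
per_minute_gb = rate * 60 / 1e9
per_hour_gb = rate * 3600 / 1e9
print(f"{per_minute_gb:.1f} GB per minute, {per_hour_gb:.0f} GB per hour")
```

With these assumptions the result is about 11 GB per minute and about 670 GB per hour, which is in the same ballpark as the numbers quoted above.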

A way to compress the data is required. Enter the Codec.

Codec is short for Coder Decoder. It is software or a device that takes uncompressed video data (which we know is huge) and makes it small enough so that it is manageable. As technology evolves, new codecs are always being developed for better file size, quality and playback.

No one codec does every job well, so each is designed with a primary goal in mind. Is it for acquisition, editing, delivery, or display?

All of these uses have different needs, which in part explains why there are so many different codecs. Some are proprietary or patent-licensed, so product manufacturers must pay a fee to use them (such as many of the MPEG variants), and others are open source.

Obviously the more the video is compressed using lossy techniques, the more data is thrown away. Also, the more efficient a codec is, the more processing is required to encode, and decode. These competing requirements must be balanced.

For acquisition, you need quality because it is the master footage. Because the storage is local, reasonably large file sizes are okay. However, you don’t want heavy processing that could slow the speed at which the data is written to the disk, and the hardware needs to be capable of writing large amounts of data in real time.

For delivery, small file size is the major consideration. Consumer-level storage needs to be cheap, so it is quite a bit smaller, and bandwidth is limited. At this level, codecs compress heavily and employ lots of tricks such as interframe coding.

Interframe coding encodes differences between frames rather than each full frame. Interframe coding provides substantial compression because in many motion sequences, only a small percentage of the pixels are actually different from one frame to the next.

The amount of compression does however depend entirely on the content.
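The idea can be shown with a toy example. This is only a sketch of the principle, storing changed pixels as (index, value) pairs in a one-dimensional "frame"; real codecs work on blocks with motion prediction, and the function names here are made up for illustration.

```python
def encode_delta(previous, current):
    """Return only the pixels that changed, as (index, new_value) pairs."""
    return [
        (i, new)
        for i, (old, new) in enumerate(zip(previous, current))
        if old != new
    ]


def decode_delta(previous, delta):
    """Rebuild the current frame from the previous frame plus the changes."""
    frame = list(previous)
    for index, value in delta:
        frame[index] = value
    return frame


frame_1 = [10, 10, 10, 10, 200, 200, 10, 10]
frame_2 = [10, 10, 10, 10, 10, 200, 200, 10]  # bright object moved one pixel right

delta = encode_delta(frame_1, frame_2)
assert decode_delta(frame_1, delta) == frame_2
print(f"{len(delta)} of {len(frame_2)} pixels stored")
```

Note that `decode_delta` cannot rebuild a frame without the previous one, which is why decoding a single interframe-coded frame means reading others first.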

This is a great way to save data, but interframe-coded material really bogs down editing software, because the software needs to read and process several frames to get the required data before it can display a single frame.

For editing, minimal decoding is preferred so that the computer resources are not tied up in just randomly accessing the footage (as you do in editing), but are available for the editing itself.

Large, lightly compressed files are usually used for editing. As an alternative, to make things work more smoothly, editors will often render low resolution, duplicate copies of the master files.

These lighter weight “proxy” files are used for the edit, then when the final edit is rendered, the master files are swapped back in, thereby maintaining quality, but with the ease of working with smaller files.

Some special-case codecs support a fourth channel (on top of red, green and blue) – the alpha channel. The alpha channel carries transparency, which means the video can be overlaid on other content, for example lower-third graphics.

It is for these competing reasons that lots of different codecs exist.

There are literally hundreds of different codec combinations. People often associate the file extension with the codec, but that is not quite correct. A file format is a standardised set of rules for storing the codec information, metadata and folder structure; a container, if you like.

Described by its extension, such as MP4, MOV or WMV, it is a container which incorporates everything required for playback: the video stream, the audio stream and the metadata. For example, MP4 files can be put together using different codecs, which means a device might be able to play back one MP4 file, but not another.

The most common file format for playback is MP4 using a H.264 codec for vision and an AAC codec for the sound. Also known as AVC (Advanced Video Coding) or MPEG-4 Part 10, the H.264 codec is extremely well supported by most devices.
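To make the container-versus-codec distinction concrete, here is a minimal sketch in which a device lists the containers and codecs it supports. The class names, codec labels and the example device are all hypothetical; they do not describe any real player.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaFile:
    container: str    # what the file extension tells you, e.g. "mp4"
    video_codec: str  # what is actually inside, e.g. "h264"
    audio_codec: str


@dataclass(frozen=True)
class Device:
    containers: frozenset
    video_codecs: frozenset
    audio_codecs: frozenset

    def can_play(self, media):
        """A file plays only if the container AND both codecs are supported."""
        return (
            media.container in self.containers
            and media.video_codec in self.video_codecs
            and media.audio_codec in self.audio_codecs
        )


# Hypothetical player that only understands H.264 video and AAC audio in MP4.
player = Device(frozenset({"mp4"}), frozenset({"h264"}), frozenset({"aac"}))

print(player.can_play(MediaFile("mp4", "h264", "aac")))  # True
print(player.can_play(MediaFile("mp4", "hevc", "aac")))  # False: same extension, different codec
```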

H.264 is a popular standard for high definition digital video, and for good reason. A codec based on the H.264 standard compresses video to roughly half the space of MPEG-2 (the old DVD standard) to deliver the same high-quality video.

This means you can enjoy HD video without sacrificing speed or performance.

H.265 (HEVC, High Efficiency Video Coding) is the successor to H.264. It offers roughly twice the compression of H.264, which is superb for bandwidth and storage reasons. However, it needs about triple the resources to encode and decode. At this stage it is not widely supported but will no doubt gain traction.

There are other factors that affect video quality in a file too:

Resolution – obviously the fewer pixels there are, the less resolution there is to see. However, I see lots of gigs that run at ultra high resolution for no noticeable benefit. All you are doing in that situation is pushing more bandwidth through your systems, which opens you up to more potential failures. For most standard screen jobs, 1280 by 720 is just fine. For example, a 1280 by 720 image requires less data than a 1920 by 1080 image (2.25 times less).

Bitrate – bitrate is the amount of data per second. It is a setting used when encoding. A lower bitrate encode will produce a smaller file, but at lower quality.

Colour Bit Depth – determines the maximum number of colours that can be displayed. 8-bit displays 256 shades for each colour. This still delivers 16.7 million colours (which is 256 to the power of 3) but explains why you can sometimes see banding on Blu-ray discs, because they use 8-bit.

Most video is 8-bit. 10-bit is 1024 shades, which produces over a billion different possible colours. 10-bit is important for colour grading in production, but not so much for delivery. Finally, 12-bit is a step up again at 4096 shades (theoretically capable of 68 billion different colours!), which is used in large-scale cinema production and projection.

Combined with higher resolution, the 12-bit colour means the ultimate experience on the big screen.
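The colour counts above follow directly from the bit depth: each channel has 2 to the power of the bit depth shades, and the total is that number cubed for three channels. A short sketch, with function names chosen only for illustration:

```python
def shades_per_channel(bit_depth):
    """Number of distinct levels one colour channel can take."""
    return 2 ** bit_depth


def total_colours(bit_depth, channels=3):
    """Every combination of levels across the colour channels."""
    return shades_per_channel(bit_depth) ** channels


for bits in (8, 10, 12):
    print(f"{bits}-bit: {shades_per_channel(bits):,} shades, {total_colours(bits):,} colours")
```

This gives 16,777,216 colours for 8-bit, 1,073,741,824 for 10-bit and 68,719,476,736 for 12-bit.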

Chroma Sub Sampling is Bit Depth’s sidekick – chroma subsampling is a type of compression that reduces the colour information in a signal in favour of brightness, or luminance data.

The human eye notices more change in brightness than colour. This reduces bandwidth without significantly affecting picture quality. A video signal is split into the two different aspects: luminance information and colour information. Luminance, or luma for short, defines most of the picture since contrast is what forms the shapes that you see on the screen.

For example, a black and white image does not look less detailed than a colour picture. Colour information, chrominance, or simply chroma, is still important, but has less visual impact.

What chroma subsampling does is reduce the amount of colour information in the signal to allow more luminance data instead. This allows you to maintain picture clarity while effectively reducing the file size by up to 50%.

In the common YUV format, brightness (the Y component) is only one of the three components of the signal, so reducing the amount of chroma data helps a lot.

Chroma sub sampling is denoted in the following way:

4:4:4 – Out of 4 pixels, no colour information lost (over the top, rarely used).

4:2:2 – Out of 4 pixels, 2 pixels get their colour information from the adjacent 2 pixels (used by professionals).

4:2:0 – Out of 4 pixels, 3 pixels get their colour information from the last remaining pixel (most common in delivery format).
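The saving from each scheme can be estimated by counting samples. The sketch below uses the usual reading of the J:a:b notation, which is an assumption added here rather than something spelled out above: over a region J pixels wide and 2 rows high, every pixel keeps its luma sample, while each of the two chroma channels keeps `a` samples on the first row and `b` on the second.

```python
def relative_size(j, a, b):
    """Data kept by J:a:b subsampling, as a fraction of full 4:4:4."""
    luma_samples = 2 * j            # every pixel in the J x 2 region keeps luma
    chroma_samples = 2 * (a + b)    # two chroma channels, a + b samples each
    full_samples = 3 * 2 * j        # three full channels with no subsampling
    return (luma_samples + chroma_samples) / full_samples


for scheme in ((4, 4, 4), (4, 2, 2), (4, 2, 0)):
    label = ":".join(str(n) for n in scheme)
    print(f"{label} keeps {relative_size(*scheme):.0%} of the data")
```

On this count 4:2:2 keeps about two thirds of the data and 4:2:0 keeps half, which lines up with the "up to 50%" reduction mentioned earlier.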

HLS (HTTP Live Streaming) – Developed by Apple, HLS is an adaptive bitrate streaming technology where versions of a H.264 file are encoded at different resolutions and bitrates (small to large), and the highest resolution is streamed until buffering occurs.

When that occurs, the player automatically switches to the next lower resolution/bitrate version. If that buffers, it will switch to the next and so on. HLS playlist files have the .m3u8 extension.
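The step-down behaviour can be sketched as a simple ladder lookup. The rendition labels and bitrates below are hypothetical, and the function is a simplification: real players also track buffer levels and measured throughput over time rather than a single bandwidth number.

```python
# Hypothetical encoding ladder, highest first: (label, required bits per second).
RENDITIONS = [
    ("1080p", 6_000_000),
    ("720p", 3_000_000),
    ("480p", 1_500_000),
    ("360p", 800_000),
]


def choose_rendition(available_bps, renditions=RENDITIONS):
    """Step down the ladder until a rendition fits the available bandwidth."""
    for label, required_bps in renditions:
        if required_bps <= available_bps:
            return label
    return renditions[-1][0]  # nothing fits: fall back to the smallest version


print(choose_rendition(4_000_000))  # 720p
```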

So…what to use?

For most playback operations with the widest compatibility, an MP4 file with a H.264 codec and 8-bit colour for the vision, and an AAC codec for the audio, is going to work. Bitrate depends on the resolution and frame rate, but a 1920 by 1080 pixel, 25 frames per second high definition video should have a bitrate of around 10 Mbit/s for the vision, and 384 kbit/s for the audio.
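To see what those bitrates mean for storage, the file size is simply the combined bitrate multiplied by the duration. This sketch ignores container overhead, which is an assumption; real files will be slightly larger.

```python
def file_size_bytes(video_bps, audio_bps, seconds):
    """Approximate file size from stream bitrates, ignoring container overhead."""
    return (video_bps + audio_bps) * seconds / 8  # 8 bits per byte


# The suggested delivery settings: 10 Mbit/s vision plus 384 kbit/s audio, for one hour.
size = file_size_bytes(10_000_000, 384_000, seconds=3600)
print(f"{size / 1e9:.2f} GB per hour")
```

That works out to a little under 5 GB per hour, against the roughly 650 GB per hour quoted at the start for uncompressed video.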

Links:

VLC Open Source Media Player – Highly compatible media player which opens most file formats, streams and codecs (including HLS streams).

www.videolan.org/vlc/index.html

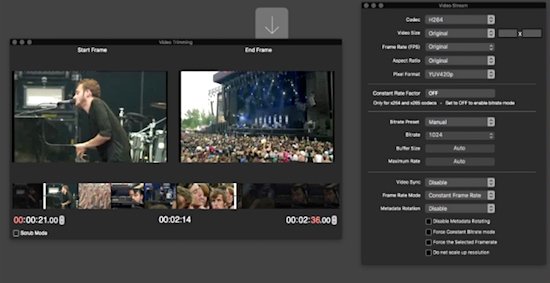

FF Works – Comprehensive media tool for macOS which is great for batch transcoding files into workable formats.

www.ffworks.net

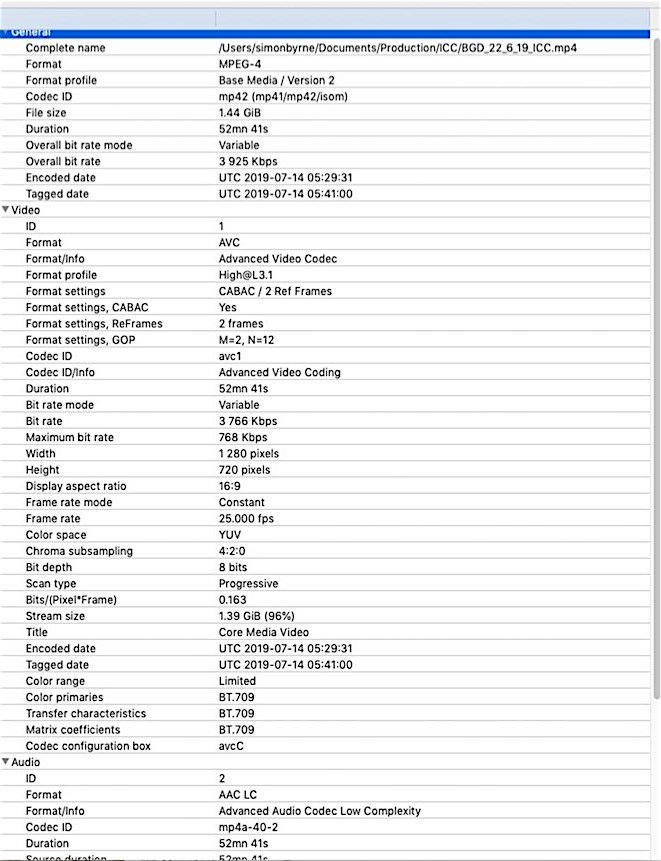

MediaInfo – Superb and lightweight application that tells you all the technical details of a media file including the codec, bitrate, bit depth, chroma subsampling and so on.

www.mediaarea.net/en/MediaInfo/Download

Comparison of File Sizes

www.digitalrebellion.com/webapps/videocalc

CX Magazine – August 2019 Entertainment technology news and issues for Australia and New Zealand – in print and free online www.cxnetwork.com.au

© CX Media

Lead image via FF Works
